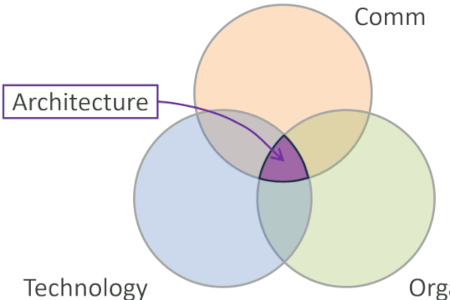


Flying on empty

Air Transat Flight 236 was en route from Toronto to Lisbon, Portugal, when the pilots noticed low oil temperature and high oil pressure on engine #2. Half an hour later, they were alerted to a fuel imbalance, which they corrected by transferring fuel from one tank to the other. Unbeknownst to them, both symptoms had a common cause: a fuel leak near engine #2. Modern jet engines use a fuel-oil heat exchanger (FOHE), which cools the oil and warms the fuel before it enters the engine. The increased fuel flow from the leak cooled the oil excessively, and the colder, thicker oil in turn showed higher pressure.

Pumping fuel from the “good” tank into the leaking tank caused the plane to run out of fuel 65 miles from the closest air base in the Azores. Amazingly, the pilots managed to glide the plane there and land it without any major injuries (Wikipedia). The plane also survived and has been nicknamed the “Azores Glider”.

The pilots could have diagnosed the problem had they known the inner workings of the FOHE and its effects. However, the enormous complexity of the aircraft has largely been abstracted away from them and replaced by a set of dials, levers, and wheels. These abstractions made flying much safer and also eliminated the need for a dedicated flight engineer. In a failure scenario like the fuel leak, though, ignoring the underlying complexity can be dangerous.

Searching for Alpha particles

Another great example of failures refusing to respect important abstractions comes from Google’s early days, when the search index, Google’s greatest asset, delivered wrong results. After an intense debugging session that yielded nothing, two of Google’s greatest minds, Jeff Dean and Sanjay Ghemawat, “went deeper”. By looking at the data in binary format, they ultimately found that the state of some memory chips had been corrupted, perhaps by errant alpha particles (the whole story is in the New Yorker and is also referenced in Jeff Dean’s presentation). The brilliant software engineers who had tried to debug the problem before them relied on the abstractions of operating systems and programming languages and hence were unable to find the bug.

Salting the Coffee

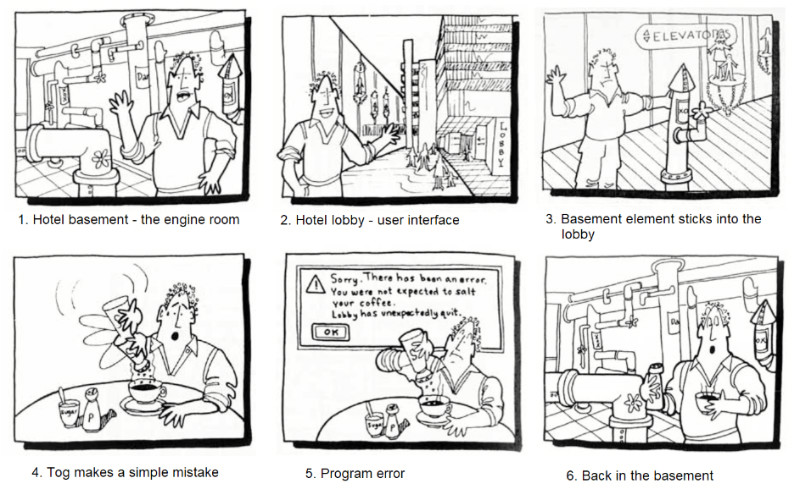

But not all examples need to be deep-down technical. A user interface classic is Tog on Interface by Bruce “Tog” Tognazzini, published back in 1992.

The book contains a short story about abstractions that leak on failure. It tells how Tog visits the impressive machinery in a fancy hotel’s basement, where all the heating, laundry, and other functions reside. He describes it as “Nothing very natural here. This is a lot like the interfaces we used to present our users: dangerous, confusing, confining.” Normally, however, he would be in the fancy lobby, which has a much nicer user interface: easy-to-follow signage, posh carpets, a bit of artwork here and there.

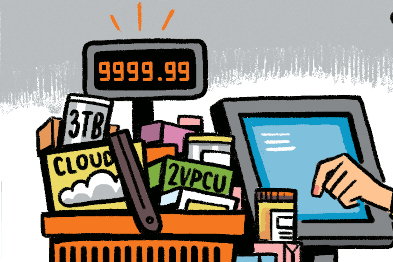

Every once in a while, computer interfaces make us stumble over some detail from the basement. In hotel terms, this feels to the user like a pipe sticking out from the basement into the lobby. IT examples are manifold, ranging from “you are running low on storage, some features may be unavailable” to “a newer version of your app is available, please update to a more recent version”.

The hotel story gets worse, though, when Tog makes a seemingly innocent mistake: instead of sugar, he accidentally puts salt into his coffee. His slip of attention is promptly followed by the message “Lobby has unexpectedly quit”, and he finds himself back in the basement, still holding his coffee cup, utterly perplexed.

In this case, the failure didn’t come from the lower layers but from an unexpected action in the upper layers of abstraction. The result is similar, though: suddenly the abstraction no longer holds. Nowadays we would likely call this the Blue Screen of Death.

Abstraction is a tool, not a replacement

So while abstraction seems to work well in the happy-day scenario, it quickly breaks down in case of errors. Does this invalidate the benefits of abstraction? Of course not. It does mean, however, that, as with any other tool, you have to know when you can rely on it and when you need to try something else. Otherwise you end up like the proverbial person with a hammer, to whom every problem looks like a nail.

It also means that when you deal with complex systems and failure scenarios, you can’t manage what you can’t understand: sometimes you have to open the hood. While not every developer needs to know the innards of the system down to the hardware level, it’s very helpful to have a few who do on hand in case something goes wrong. In the cloud, the vendor’s high-end support will often have to jump in, as you may not have direct access to all the lower layers.

Failure in this context includes not just functional issues but also performance issues. Just as functional errors bubble up through the layers of abstraction, so do performance issues like saturated network segments or low-level race conditions.
